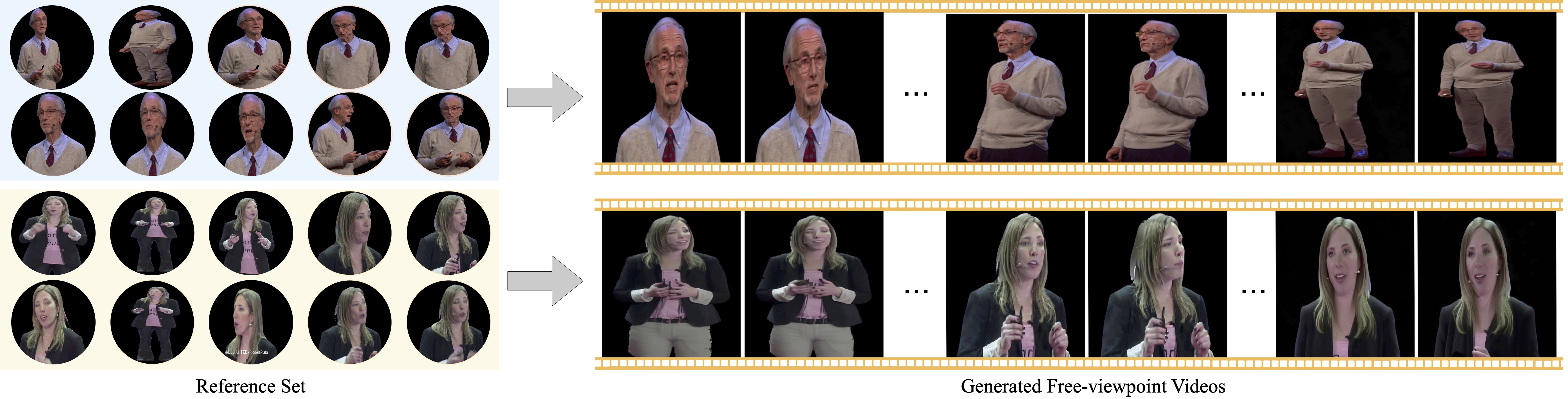

Diffusion-based human animation aims to animate a human character based on a source human image and driving signals such as a sequence of poses. Leveraging the generative capacity of diffusion models, existing approaches can generate high-fidelity poses but struggle with significant viewpoint changes, especially in zoom-in/zoom-out scenarios where the camera-character distance varies. This limits applications such as cinematic shot-type planning and camera control. We propose a pose-correlated reference selection diffusion network that supports substantial viewpoint variations in human animation. Our key idea is to let the network take multiple reference images as input, since significant viewpoint changes often lead to missing appearance details on the human body. To reduce the resulting computational cost, we first introduce a novel pose correlation module that computes similarities between non-aligned target and source poses, and then propose an adaptive reference selection strategy that uses the attention map to identify key regions for animation generation.

To train our model, we curated a large dataset from public TED talks featuring varied shots of the same character, which helps the model learn synthesis from different perspectives. Our experimental results show that, with the same number of reference images, our model performs favorably against current SOTA methods under large viewpoint changes. We further show that adaptive reference selection chooses the most relevant reference regions for generating humans under free viewpoints.

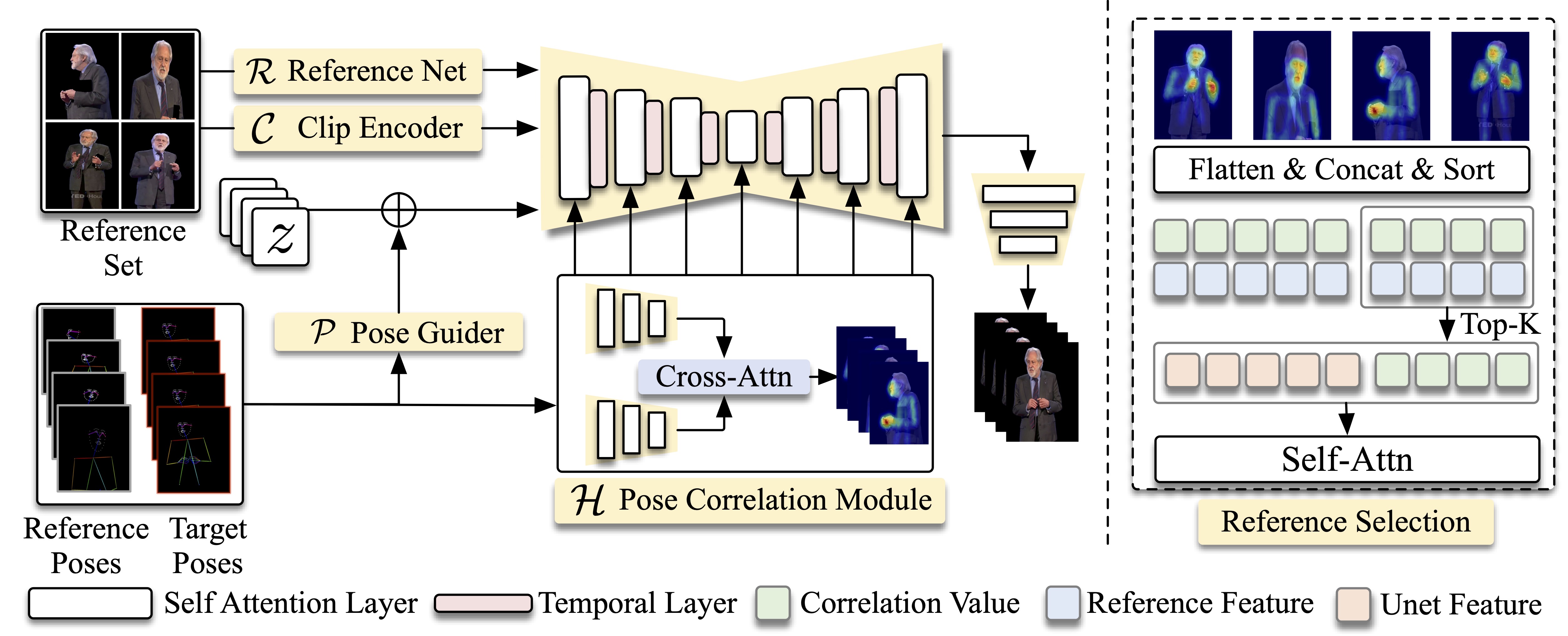

An illustration of our framework. The framework feeds a reference set into a reference UNet to extract reference features. To filter out redundant information in the reference feature set, we propose a pose correlation guider that creates a correlation map indicating which spatial regions of each reference are informative. We then adopt a reference selection strategy that picks the informative tokens from the reference feature set according to the correlation map and passes them to the subsequent modules.
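The selection step described above can be sketched in a few lines. This is a minimal illustration and not the authors' implementation: it assumes the correlation map is a softmax attention between target pose features and reference pose features, averaged over target positions, and that selection is a plain top-k over the resulting scores. The function names `correlation_map` and `select_tokens`, the scaling by the square root of the feature dimension, and the `keep` parameter are all hypothetical.

```python
import math


def correlation_map(target_feats, ref_feats):
    """Score each reference token by the attention it receives from target pose tokens.

    Assumption: softmax dot-product attention, averaged over target positions.
    target_feats: list of target pose feature vectors (queries).
    ref_feats: list of reference pose feature vectors (keys), one per reference token.
    Returns one score per reference token; the scores sum to 1.
    """
    dim = len(ref_feats[0])
    scores = [0.0] * len(ref_feats)
    for query in target_feats:
        logits = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
            for key in ref_feats
        ]
        peak = max(logits)  # subtract the max for a numerically stable softmax
        weights = [math.exp(logit - peak) for logit in logits]
        total = sum(weights)
        for i, weight in enumerate(weights):
            scores[i] += weight / total
    return [score / len(target_feats) for score in scores]


def select_tokens(ref_tokens, scores, keep):
    """Keep the `keep` highest-scoring reference tokens, preserving their original order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:keep]
    kept = sorted(ranked)
    return kept, [ref_tokens[i] for i in kept]


if __name__ == "__main__":
    # Toy example: one target pose token, four reference tokens.
    target = [[1.0, 0.0]]
    reference = [[5.0, 0.0], [0.0, 5.0], [4.0, 0.0], [-5.0, 0.0]]
    scores = correlation_map(target, reference)
    kept, tokens = select_tokens(["tok0", "tok1", "tok2", "tok3"], scores, keep=2)
    print(kept, tokens)
```

Only the selected tokens would be passed on to later attention layers, so the cost of attending to the reference set grows with `keep` rather than with the total number of reference tokens across all reference images.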

To address this limitation and advance research in this area, we introduce a novel multi-shot TED video dataset (MSTed), designed to capture significant variations in viewpoint and camera distance. TED videos were chosen for their diverse real-world settings, professional quality, rich variations in human presentation, and broad public availability, making them an ideal foundation for a comprehensive and realistic multi-shot video dataset. The MSTed dataset comprises 1,084 unique identities and 15,260 video clips, totaling approximately 30 hours of content.

@article{hong2024fvhuman,
  author = {Hong, Fa-Ting and Xu, Zhan and Liu, Haiyang and Lin, Qinjie and Song, Luchuan and Shu, Zhixin and Zhou, Yang and Ceylan, Duygu and Xu, Dan},
  title = {Free-viewpoint Human Animation with Pose-correlated Reference Selection},
  journal = {CVPR},
  year = {2025},
}